Sometimes a company does something that makes you really, really happy. Microsoft did this when they delivered Windows Home Server (WHS). HP did this when they released the HP MediaSmart Server. I finally had a sweet solution: ALL my home machines silently backed up every day, a highly available set of simple file shares for storing data, NO drive letters to remember, the ability to add storage to the pool just by plugging in a new hot-swappable drive, and selectable per-share duplication. All this with:

- a very tiny box

- ultra-low power

- hot-swappable drive bays

- manages my printers

- has gigabit networking

- lets me log in from the road and access my stuff

- shuts itself off at night and turns back on in the morning

- makes almost no noise sitting on my desk

- has lots of USB ports for charging stuff

- runs my weather station

- serves all my music and photos through iTunes, Windows Media Player, or to me over the web.

I should add that the user management in this system is so much simpler than managing a server domain. Just add user accounts on the server that match the names of your LOCAL USER accounts on your home PCs, and from that point on, the server takes care of syncing password changes. There's no need to have home users log into and manage domain accounts like you'd have to with a real domain server.

So, for the uninitiated, WHS used a pretty sweet technology called Drive Extender (DE), which essentially pooled the storage disks into what looked like one huge disk. It protected your files by ensuring that every file in a duplicated share existed on at least 2 physical drives at all times. So, if a drive dies, you remove it and plug in a new one whenever you get a chance, and DE takes care of all the rest. I'd like a reliable DE replacement, not because I'm totally bent on being able to mix drives of different sizes, but because it's relatively painless. RAID is not painless and needs experienced people to maintain it.
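To make the "at least 2 physical drives" idea concrete, here is a toy model of a pool with selectable per-share duplication. It is a hypothetical sketch, not how Microsoft's Drive Extender actually placed or migrated data: the `Drive` and `Pool` classes, the "put copies on the emptiest drives" rule, and the `heal` step are all illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Drive:
    name: str
    capacity_gb: int
    files: dict = field(default_factory=dict)  # "share/path" -> size in GB

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.files.values())


class Pool:
    """Toy drive pool with per-share duplication (hypothetical, not real DE)."""

    def __init__(self, drives, duplicated_shares):
        self.drives = list(drives)
        self.duplicated_shares = set(duplicated_shares)
        self.catalog = {}  # every file the pool knows about: key -> size

    def copies_wanted(self, share: str) -> int:
        # Duplicated shares keep a copy on two different physical drives.
        return 2 if share in self.duplicated_shares else 1

    def _targets(self, key, size_gb):
        # Drives that don't already hold this file and have room, emptiest first.
        ok = [d for d in self.drives if key not in d.files and d.free_gb >= size_gb]
        return sorted(ok, key=lambda d: d.free_gb, reverse=True)

    def store(self, share, path, size_gb):
        key = f"{share}/{path}"
        n = self.copies_wanted(share)
        targets = self._targets(key, size_gb)[:n]
        if len(targets) < n:
            raise RuntimeError(f"not enough space for {n} copies of {key}")
        for d in targets:
            d.files[key] = size_gb
        self.catalog[key] = size_gb
        return [d.name for d in targets]

    def heal(self):
        """Re-create missing copies; return files that have no surviving copy."""
        lost = []
        for key, size in list(self.catalog.items()):
            holders = [d for d in self.drives if key in d.files]
            if not holders:
                lost.append(key)
                del self.catalog[key]
                continue
            missing = self.copies_wanted(key.split("/", 1)[0]) - len(holders)
            for d in self._targets(key, size)[:max(missing, 0)]:
                d.files[key] = size
        return lost

    def remove_drive(self, name):
        # Simulates pulling a dead drive, then letting the pool repair itself.
        self.drives = [d for d in self.drives if d.name != name]
        return self.heal()

    def add_drive(self, drive):
        # A freshly plugged-in drive simply becomes more room in the pool.
        self.drives.append(drive)
        return self.heal()


pool = Pool([Drive("A", 1000), Drive("B", 500), Drive("C", 500)], duplicated_shares={"Photos"})
pool.store("Photos", "a.jpg", 10)    # two copies, on two different drives
pool.store("Videos", "b.mkv", 100)   # one copy only
print(pool.remove_drive("A"))        # files that could not be recovered
```

In this model, pulling drive A leaves the duplicated photo on B and C, while the video in the non-duplicated share, which lived only on A, is reported as lost. That trade-off is what selectable duplication lets you control share by share.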

So what if the media streaming didn't really work that well, and the iTunes server, which used to work pretty well, isn't so great anymore? The daily image backups were awesome in that they only required enough storage to account for the changed data. Fortunately, Windows Home Server 2011 doesn't eliminate that…

Enter 2010: Microsoft was developing the next version, code-named Vail, which should have extended this awesomeness even further by basing the core on 64-bit Server 2008 R2 instead of 32-bit Server 2003. I was so happy. Thank you, Microsoft.

Then apparently they Forgot The Whole Point of WHS and decided to remove Drive Extender. Hence, no more simple share duplication, no more plugging in a new drive when you need more space in the pool, etc. Now, they aren't saying what's going to replace it, but by default, it's probably going to be back to hardware RAID or just taking your chances with drive failure. I felt a bit hosed by this. I really wanted to upgrade and keep the purely share-based disk pooling system. Don't get me wrong, I love Microsoft technology; most of the time I just despise the decision-making process there. What irks me is the dishonesty here. They said that this decision was the result of customer and partner feedback. This is so not true. They may have talked to their customers, but they weren't listening, as there is simply no way the WHS end users said anything even remotely like “it’s ok to go ahead and get rid of DE, we hate it”.

So now I am unhappy. I don't want to upgrade to WHS 2011 without some form of DE replacement. Sure, it doesn't HAVE to be DE, but it does HAVE to be reliable, and it needs to come from Microsoft. Unfortunately, that's not going to happen. That said, DriveBender does look like it may solve this problem.

I'm going to be much more cautious about promoting WHS moving forward, but I still think it was one of the most underrated products of the past few years. Shame on HP for not pushing back on the WHS team and for discontinuing its great little media server boxes. Granted, they did continue to produce the HP ProLiant MicroServer line, but these are a little larger and louder, draw a bit more power, and don't have hot-swap bays.

Where to now?

So, what options do I have? Well, I could just buy another EX495 as a backup and keep using WHS v1 as is for the next 5 or 6 years.

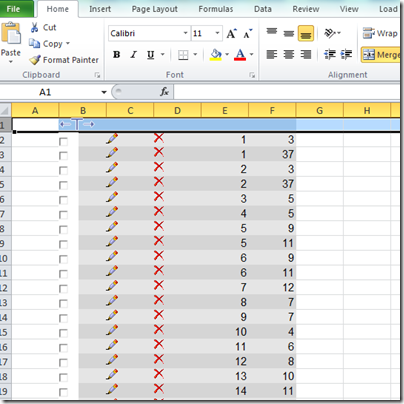

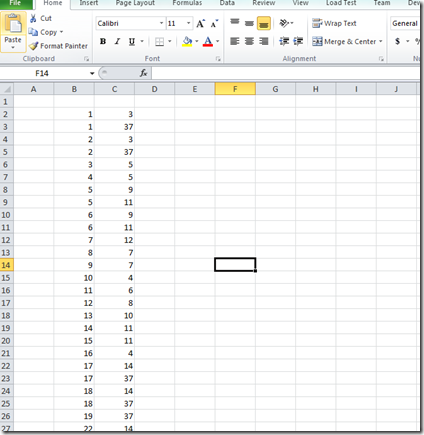

I could buy a Drobo box for a ton more coin, but this only solves part of the data protection problem and requires me to use up tons of disk space, since you can't selectively enable duplication per share the way you could with DE. Drobo doesn't even come close to providing anything like the super-easy, automated, network-wide system backups. Remember, WHS uses a fraction of the disk space any other backup system does because it shares and reuses backed-up file clusters: a 'file' backed up on one machine is stored once and shared across all backups of all machines, including future backups, as long as it's identical. Some backup programs manage something similar with incremental backups of a single machine, but not across a whole home network of machines! My server holds over 100 complete, instantly browsable backups of our 7 PCs spanning 3 years, and they occupy less than 900 GB. Drobo can't help here, and it's a Linux-based platform, so you pretty much have to be a geek to get apps running and configured on it, although it's not terrible. My mom won't be doing this anytime soon, however, so it's not yet simple enough.
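The cluster sharing described above is a form of single-instance storage: each unique block of data is kept once, and every backup is just a list of references to those blocks. The toy model below sketches that idea under stated assumptions; the fixed 4 KB cluster size, the SHA-256 fingerprints, and the `BackupStore` class are illustrative choices, not WHS's actual on-disk format.

```python
import hashlib

CLUSTER_SIZE = 4096  # assumed cluster size, chosen for illustration


class BackupStore:
    """Toy single-instance backup store (hypothetical, not the WHS format)."""

    def __init__(self):
        self.clusters = {}  # SHA-256 hex digest -> cluster bytes, stored once
        self.backups = []   # (machine name, ordered list of cluster digests)

    def backup(self, machine: str, disk_image: bytes) -> int:
        """Record one backup; return how many *new* clusters had to be stored."""
        digests, new = [], 0
        for i in range(0, len(disk_image), CLUSTER_SIZE):
            chunk = disk_image[i:i + CLUSTER_SIZE]
            h = hashlib.sha256(chunk).hexdigest()
            if h not in self.clusters:  # identical clusters from any machine are reused
                self.clusters[h] = chunk
                new += 1
            digests.append(h)
        self.backups.append((machine, digests))
        return new

    def restore(self, index: int) -> bytes:
        """Rebuild a full image from its list of shared clusters."""
        _, digests = self.backups[index]
        return b"".join(self.clusters[h] for h in digests)

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.clusters.values())


store = BackupStore()
shared_os = b"".join(bytes([i]) * CLUSTER_SIZE for i in range(4))  # same on every PC
store.backup("pc1", shared_os + b"A" * CLUSTER_SIZE)  # 5 new clusters
store.backup("pc2", shared_os + b"B" * CLUSTER_SIZE)  # only 1 new cluster
store.backup("pc1", shared_os + b"C" * CLUSTER_SIZE)  # next day: 1 changed cluster
print(store.stored_bytes(), "bytes stored for 3 full backups")
```

Three full 20 KB images end up costing 7 clusters (28 KB) instead of 60 KB, because the shared "OS" clusters are stored once for both machines and both days. Every backup can still be restored in full.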

I could use Drobo as the file system and WHS on the EX495, but then I'd have two boxes: twice the power, more than twice the noise and heat, etc. No thanks; it's not plug-and-play like WHS v1.

I suppose sometime before the EX495 dies I'll build a new, quiet, low-power machine tuned for this task and upgrade to Windows Storage Server 2012. We'll see what the future holds…